Databricks Foreign Catalogs

A deep dive into Databricks Foreign Catalogs for Glue Iceberg table access

What should be done when one’s Terraform turns into technical debt?

Cloud computing has fundamentally transformed how computer software is run. Most software companies no longer purchase processors, hard drives or other flavors of silicon that one might associate with computers. Instead, many companies outsource their hardware needs to public cloud providers. These providers, led by Amazon Web Services (AWS) and Microsoft Azure, pool hardware together and sell it in the form of on-demand virtual machines, cloud storage and other services. This consolidation of computing has lowered the barrier to entry for engineers to build reliable, performant and secure software solutions.

Infrastructure as Code (IaC) has emerged alongside cloud computing as a crucial approach in modern software development operations. It refers to the practice of managing infrastructure by using code and automation rather than manual processes. This approach offers a key advantage: IaC enables hardware to be treated as software. With IaC, processors and hard drives can be version-controlled, tested, and deployed using the same practices as software development. This leads to greater hardware and software reliability and enables teams to quickly adapt to changing requirements.

While cloud computing and IaC are amazing tools, not every software engineer has the bandwidth to become an expert in them. AWS offers over 200 services ranging from simple storage to text-to-speech. Thankfully, abstraction is a powerful concept that engineers can fall back on. Just as not every Python developer needs to understand binary machine code, not every application developer needs to be an expert in AWS.

There are many organizational approaches to abstracting cloud computing, but one that is rightfully gaining traction is Platform Engineering. In this paradigm, teams are dedicated to creating and maintaining the foundational platforms and infrastructure that support software applications and services. Platform engineers are responsible for a wide range of tasks, including setting up and maintaining cloud infrastructure, designing and implementing deployment pipelines, managing container orchestration systems, and ensuring the reliability and scalability of the platform.

Rearc recently counseled a well-established enterprise platform team. Let's call them the Orange team. The Orange team maintained a self-service website for deploying applications in Kubernetes. Using this website, multiple application teams could roll out their containerized apps to the cloud with just a few button clicks. The Orange team leveraged Terraform (a popular IaC tool) behind the scenes to deploy and maintain the resulting cloud infrastructure.

The Orange team was quite successful, and over time the number of application teams it supported grew. By the time Rearc entered the scene, the Orange team was managing virtual private clouds, Kubernetes clusters, load balancers and more. However, over time the Orange team accrued technical debt and was unable to dedicate bandwidth to address it.

Technical debt is a term that software developers should be familiar with. It is a normal and inevitable side effect of software engineering. Software engineers “borrow” against code quality by making sacrifices, taking shortcuts, or using workarounds to meet delivery deadlines. Technical debt is an especially apt analogy because, like real debt, it tends to accumulate interest. The longer technical debt is left untreated, the more complicated and time-consuming it is to fix.

As the Orange team aged and its clientele grew, so too did its technical debt. The team managed over 1,500 Terraform deployments; some used Terraform versions as old as 0.11. The Orange team could not use modern security tools because of compatibility issues with their outdated Terraform. They also struggled to roll out enhancements to the platform because of the overwhelming scale. It was past time to start making payments on their debt.

First and foremost, the Orange team needed to upgrade their Terraform code. Terraform version 0.11 was released back in 2017 and official support had long since ended. This meant that the Orange team was not receiving security updates, enhancements or bug fixes to their IaC tool. Furthermore, the security scanning tool that the Orange team wanted to leverage (Open Policy Agent) did not support scanning such outdated Terraform code.

Upgrading 1,500 deployments of outdated Terraform was no simple task. The scale and age of the Terraform code made a manual approach prohibitively time-consuming and tedious. Moreover, automation was not as straightforward as it might seem. The Orange team owned a dozen different Terraform modules, each with varying degrees of support for newer Terraform versions. Due to these differences, each upgrade required different steps to complete. Additionally, upgrades failed for many different reasons, including transient ones such as network timeouts. Even if the Orange team succeeded in upgrading their Terraform code, new versions of Terraform would continue to be released. The Orange team needed a capable automation tool that could be used again in the future.

Requirements for a Terraform Upgrading Tool:

With the above requirements in mind, Rearc conducted an audit of available Terraform tools.

Terraform Cloud is a feature-rich service offering that manages cloud resources, variables, secrets, and more. However, using any software as a service is a hurdle for enterprises with strong data control and regulatory requirements. Terraform Cloud was no exception; it had requirements around state file storage and access that conflicted with the Orange team's practices. Furthermore, Terraform Cloud is an expensive service when used at scale.

Atlantis is a great open-source project for Terraform pull-request automation. However, it faced scalability issues and was too narrowly focused to address the Orange team's needs.

Spacelift is a powerful Continuous Integration / Continuous Deployment (CI/CD) tool for Terraform. It can combine Terraform deployments into useful logical groups called "Stacks". While it could have benefited the Orange team, it did not address the team's immediate needs for performing Terraform upgrades. This is especially true given that the Orange team already used Jenkins as their CI/CD tool.

Argo Workflows is a workflow engine that showed promise because of its flexibility and scalability. The Orange team could have written workflows to automate Terraform upgrades, but Argo is not very good at tracking workflows at scale. Furthermore, every component of an Argo Workflow needs to run inside a container on Kubernetes, a system that is great for scalability but that adds complexity.

All these tools are powerful and mature in their own right, but none of them met every requirement of the Orange team.

One solution to the shortcomings of the above tools is Airflow. Airflow is heavily marketed as an extract, transform and load (ETL) data integration tool, but let's break down what it can do:

Thus, Airflow is not only useful for data applications. It is a job scheduler on steroids. This is exactly what the Orange team needed: a configurable and scalable automation tool that could handle thousands of administrative tasks.

The design process chronicled above led to the creation of Bronco. Bronco is a task and workflow codebase, built on Airflow, designed to automate the administrative duties of a platform team. Bronco is a game changer in the space of platform engineering, because it enables platform teams to far exceed previous limits on their scale and development velocity. Bronco is an open source project built and maintained by Rearc. The source code is available here.

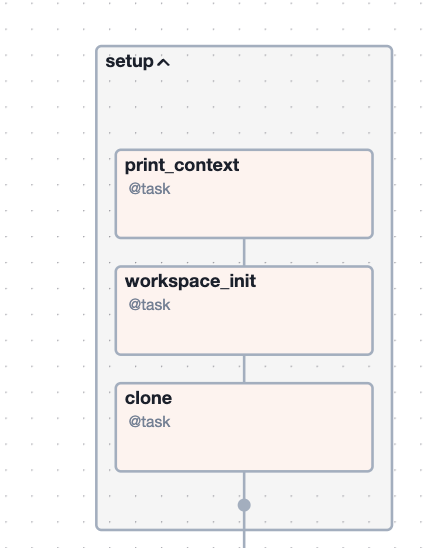

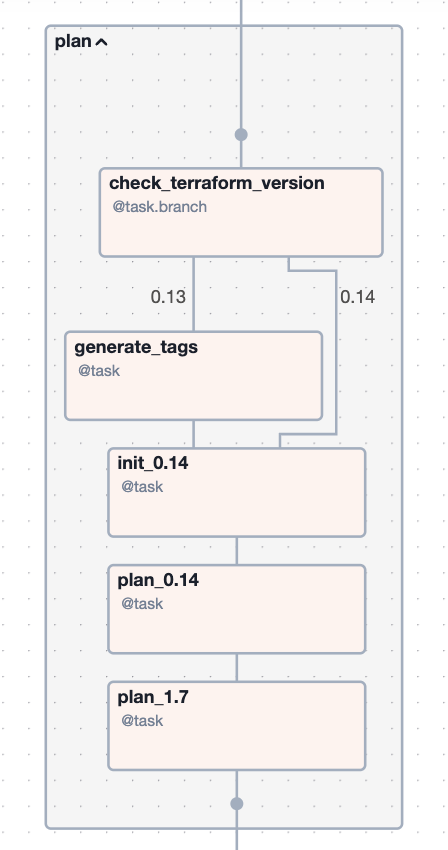

Bronco uses Airflow Directed Acyclic Graphs (DAGs) to define administrative workflows. DAGs can be thought of as flowcharts without any loops. Their structure allows sophisticated workflows to be codified into discrete tasks and decision points.
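To make the "flowchart without loops" idea concrete, here is a minimal sketch that uses only Python's standard library rather than Airflow itself. The task names are hypothetical and are not taken from Bronco. Each task lists the tasks it depends on, and a topological sort yields an order in which they can safely run. A graph containing a loop is rejected.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical task names (not Bronco's); each key maps to the set of
# tasks that must finish before it can start.
upgrade_workflow = {
    "clone_repo": set(),
    "detect_version": {"clone_repo"},
    "upgrade_code": {"detect_version"},
    "open_pull_request": {"upgrade_code"},
    "apply_terraform": {"open_pull_request"},
}


def execution_order(dependencies):
    """Return one valid run order; raises CycleError if the graph has a loop."""
    return list(TopologicalSorter(dependencies).static_order())


def has_cycle(dependencies):
    """Report whether the dependency graph contains a loop."""
    try:
        execution_order(dependencies)
    except CycleError:
        return True
    return False


if __name__ == "__main__":
    print(execution_order(upgrade_workflow))
    # A task that depends on itself through another task is not a valid DAG.
    print(has_cycle({"a": {"b"}, "b": {"a"}}))
```

Airflow performs a similar kind of dependency resolution when it schedules tasks, in addition to handling retries, logging and parallel execution.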

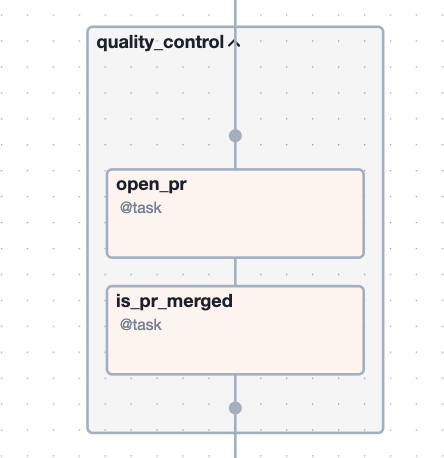

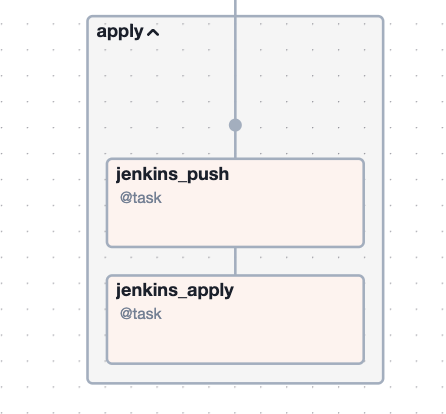

Above is Airflow's Graph view of a Bronco DAG. It is read from top to bottom, with each box representing a task and each arrow representing a dependency. Flexibility is the key takeaway here. Bronco tasks are written in Python (though other operators may be used) and thus their capabilities are nearly limitless. Additionally, tasks can be strung together to represent all sorts of complex, conditional or branching workflows.

The Bronco DAG illustrated above was used by the Orange team to upgrade some of their Terraform deployments. These deployments had varying Terraform versions, so the DAG was equipped with branching logic to handle these differences. The DAG also had a quality control stage, which required a GitHub pull request. Lastly, the DAG applied the Terraform upgrade using the Orange team's Jenkins server. Applying Terraform is often the last step to complete a Terraform upgrade.
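The version-based branching described above can be sketched as a plain Python decision function. This is an illustration only: the branch names and version thresholds are assumptions made for the example, not the Orange team's actual logic or Bronco's code.

```python
def parse_version(version):
    """Turn a version string such as '0.11.14' into a comparable tuple."""
    return tuple(int(part) for part in version.split("."))


def choose_branch(current_version):
    """Pick the next task for a deployment, mimicking a DAG decision point.

    The returned task names are hypothetical placeholders.
    """
    parsed = parse_version(current_version)
    if parsed < (0, 12):
        # Very old configurations need their syntax rewritten first.
        return "rewrite_legacy_syntax"
    if parsed < (1, 0):
        # Newer pre-1.0 configurations can move up version by version.
        return "stepwise_upgrade"
    # Nothing to do for deployments that are already current.
    return "skip_upgrade"


if __name__ == "__main__":
    for version in ("0.11.14", "0.13.7", "1.5.0"):
        print(version, "->", choose_branch(version))
```

In the Orange team's DAG, a decision like this would sit at the point where the graph forks, with tasks on the branches not taken marked as skipped.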

DAGs like this one were instrumental in automating Terraform upgrades, but the real power of Bronco is how well it handles concurrency.

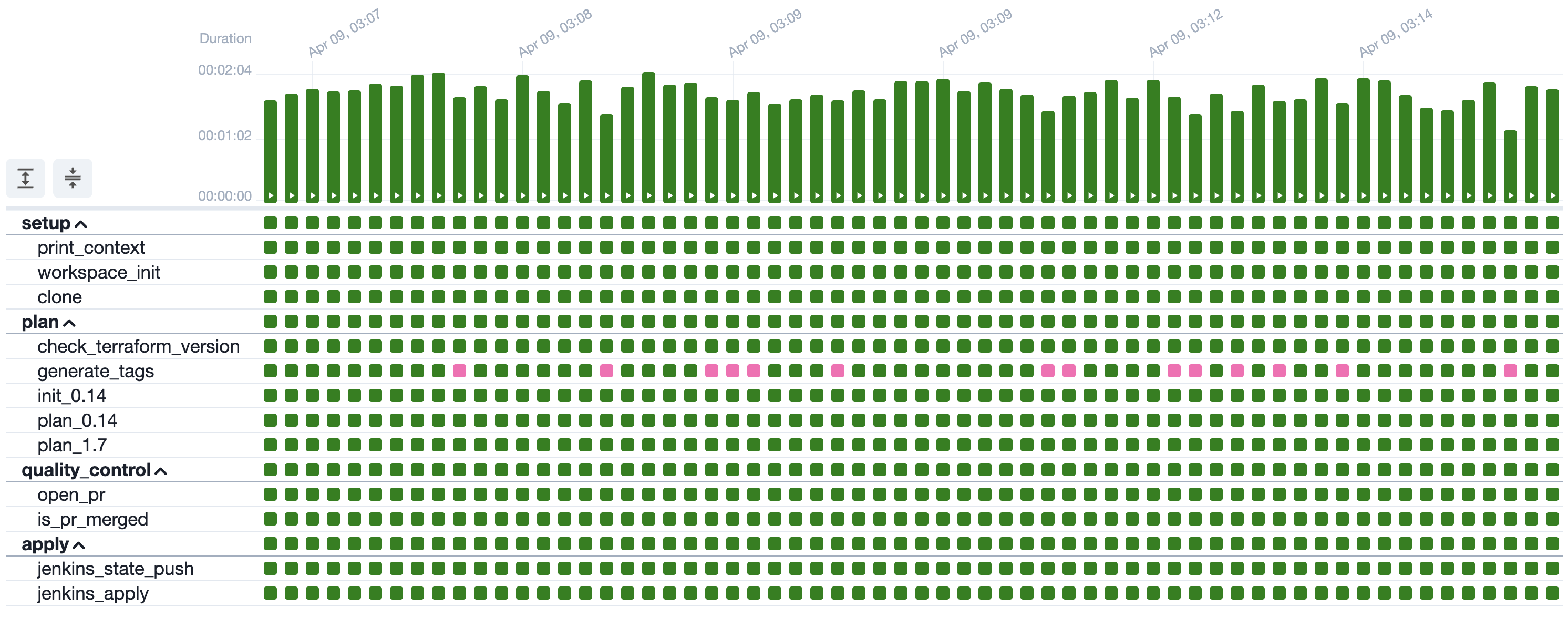

Automating a Terraform upgrade is a commendable achievement, but even automated upgrades take time. A single Bronco DAG can take 15 minutes to run, longer if cloud infrastructure needs to be updated or replaced. With over 1,500 Terraform deployments needing upgrades, that time adds up. All platform teams want to minimize infrastructure maintenance periods. Thankfully, operating at scale is where Bronco shines. The same Bronco DAG discussed above is shown below in Airflow's Grid view.

The Grid view shows all current and previous executions of the Bronco DAG. The horizontal axis depicts the executions (one per Terraform deployment), while the vertical axis shows each task that comprises the DAG. Each colored square represents the execution status of a single task, with green indicating success and pink representing a skipped task. This image alone shows the execution of 50 Terraform deployment upgrades, consisting of over 500 tasks!

Bronco can execute dozens of upgrades simultaneously. The hidden beauty of the Grid view is that it tracks all concurrent upgrades in real time. Every task's status and logs are accessible at a glance or with the click of a button. Any failures show up as a bright red square, and tasks that failed can be rerun from the point of failure without needing to rerun the entire DAG. The capabilities of the Grid view are invaluable.
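Rerunning from the point of failure can be illustrated with a small standalone sketch. It is not Airflow's implementation; the `run_pipeline` helper and the task names are hypothetical. The idea is simply to remember which tasks already succeeded and skip them on the next attempt.

```python
def run_pipeline(tasks, completed=None):
    """Run (name, action) pairs in order, skipping names already completed.

    Returns a tuple of (completed task names, name of the failed task or None).
    """
    completed = list(completed or [])
    for name, action in tasks:
        if name in completed:
            continue  # succeeded on an earlier attempt, so do not repeat it
        try:
            action()
        except Exception:
            return completed, name  # stop here; later tasks are not attempted
        completed.append(name)
    return completed, None


if __name__ == "__main__":
    attempts = {"apply": 0}

    def flaky_apply():
        # Simulate a network timeout on the first attempt only.
        attempts["apply"] += 1
        if attempts["apply"] == 1:
            raise TimeoutError("simulated network timeout")

    tasks = [
        ("plan", lambda: None),
        ("apply", flaky_apply),
        ("verify", lambda: None),
    ]
    done, failed = run_pipeline(tasks)
    print(done, failed)
    done, failed = run_pipeline(tasks, done)  # resumes at "apply"
    print(done, failed)
```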

Bronco empowered the Orange team to automate Terraform upgrades at a massive scale. Hundreds of upgrades were performed in a single day. The team made quick work of their formerly foreboding technical debt. But it was apparent that Bronco was good for more than just Terraform upgrades.
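A back-of-the-envelope estimate shows why concurrency matters at this scale. The sketch below uses the figures quoted earlier (1,500 deployments, roughly 15 minutes per run). The concurrency level of 50 is an assumption chosen for illustration, and the model ignores failures, retries and longer-running infrastructure changes.

```python
import math


def total_hours(deployments, minutes_per_run, concurrent_runs):
    """Estimate wall-clock hours when runs execute in equal-sized waves."""
    waves = math.ceil(deployments / concurrent_runs)
    return waves * minutes_per_run / 60


if __name__ == "__main__":
    # One upgrade at a time: 1,500 runs of 15 minutes each.
    print(total_hours(1500, 15, 1))   # 375.0 hours, more than two weeks
    # Assumed 50 upgrades at a time: 30 waves of 15 minutes each.
    print(total_hours(1500, 15, 50))  # 7.5 hours
```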

The Orange team discovered that the benefits of Bronco were compounding. Bronco tasks can be reused if constructed thoughtfully. As more Terraform projects were upgraded, Bronco's codebase grew. It became hard to match the automation and reusability Bronco provided.

The Orange team had other administrative responsibilities besides upgrading Terraform versions. The impetus for upgrading Terraform in the first place was a desire for cloud vulnerability scanning. After the Terraform upgrades were completed, cloud vulnerability scanning was implemented with a Bronco DAG. Scans surfaced vulnerabilities in the Terraform configuration of many Kubernetes workers. The solution, to move EKS workers into a private subnet, was achieved with a Bronco DAG.

Administrative work is inevitable when dealing with Terraform at scale. The Orange team routinely automated this work with Bronco.

Airflow is typically marketed as an ETL tool for data engineers, but its practicality goes well beyond that. Bronco is a tool built on this idea. It is a powerful general-purpose workflow automation tool particularly well-suited for platform teams that manage cloud infrastructure at scale.
